Trulia’s platform team is excited to announce the company’s first open-source serverless project: cidr-house-rules. Cidr-house-rules helps any team that uses multiple Amazon Web Services (AWS) accounts build more dynamic DevOps tooling, such as data-driven Terraform modules.

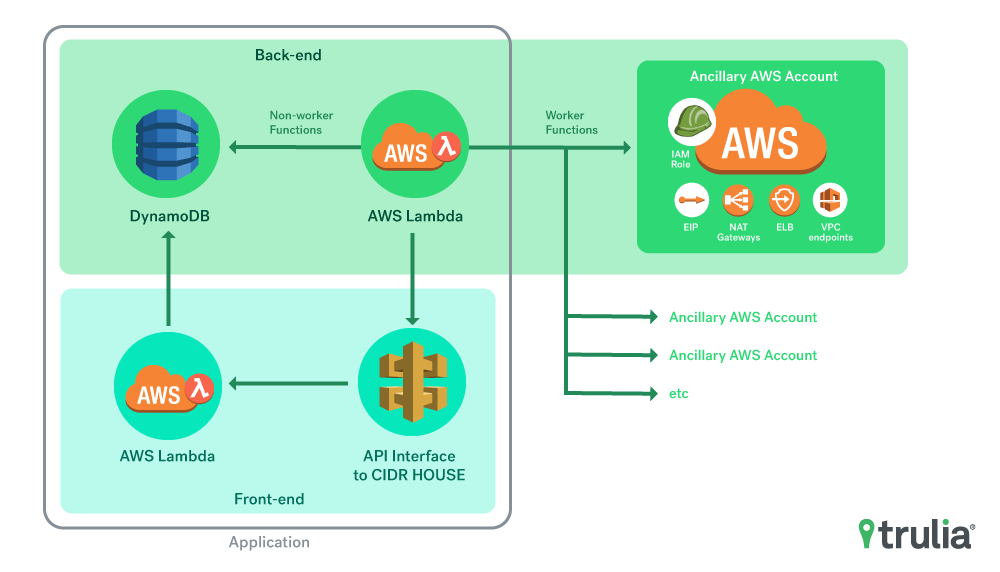

Cidr-house-rules is a lightweight API and collection system that centralizes important AWS resource information from multiple AWS accounts. It is built entirely on AWS serverless technologies: Lambda, API Gateway, DynamoDB, and IAM roles. With it, you can create and use data-driven Terraform modules.

In other words, cidr-house-rules is a DevOps tool that will pull information from multiple AWS accounts and provide an interface around this data for other applications to leverage.

Why Trulia Built This System

At Trulia, we have multiple AWS accounts, and we needed a way to manage network access between all of them. We chose AWS Security Groups because they let us restrict access to only the services that need to communicate with each other. To do this with Security Groups, we needed a better way to track our Elastic IPs, NAT Gateways, and other important networking information, so that we could create the appropriate Security Groups for access between microservices.

In cases where VPC Peering wasn’t an option, more dynamic Security Groups became important. Beyond managing network access between accounts, we also wanted to proactively detect potential VPC CIDR block overlaps and avoid them where possible. We also needed to start tracking usage of AWS PrivateLink VPC endpoints across our AWS accounts.

To achieve the goals listed above, we needed a new tool. So, we set out to build one. Enter: cidr-house-rules.

Overview of cidr-house-rules

We built cidr-house-rules to run from a central AWS account and use AWS STS AssumeRole. The Lambda functions in cidr-house-rules assume a role in each AWS account that you want to track. That role is created in each tracked account and has a minimal read-only policy attached. The function below, which each Lambda imports, performs the role assumption:

```python
import boto3


def establish_role(acct):
    """Assume role_cidr_house in account `acct` and return temporary credentials."""
    sts_connection = boto3.client('sts')

    acct_b = sts_connection.assume_role(
        RoleArn="arn:aws:iam::{}:role/role_cidr_house".format(acct),
        RoleSessionName="cross_acct_lambda",
    )

    # The credential values are plain strings, so they can be read straight
    # from the response; only the Expiration field is a datetime.
    credentials = acct_b['Credentials']
    ACCESS_KEY = credentials['AccessKeyId']
    SECRET_KEY = credentials['SecretAccessKey']
    SESSION_TOKEN = credentials['SessionToken']

    return ACCESS_KEY, SECRET_KEY, SESSION_TOKEN
```
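To show how an import function might use these temporary credentials, here is a minimal sketch that builds an EC2 client in the target account and region and lists VPC CIDR blocks. The function name `collect_vpc_cidrs` and the injectable `client_factory` parameter are hypothetical (they exist only so the logic can be exercised without AWS access), and the project's actual `import_cidrs.py` may differ.

```python
def collect_vpc_cidrs(acct, region, assume_role, client_factory=None):
    """Return (vpc_id, cidr_block) pairs for every VPC in one account/region.

    `assume_role` is expected to behave like establish_role() and return an
    (access_key, secret_key, session_token) tuple. `client_factory` is a
    hypothetical hook that defaults to boto3.client.
    """
    if client_factory is None:
        import boto3  # imported lazily so the logic can be tested without boto3
        client_factory = boto3.client

    access_key, secret_key, session_token = assume_role(acct)

    # Every API call made through this client runs as the assumed role
    # in the tracked account.
    ec2 = client_factory(
        'ec2',
        region_name=region,
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
        aws_session_token=session_token,
    )
    response = ec2.describe_vpcs()
    return [(vpc['VpcId'], vpc['CidrBlock']) for vpc in response['Vpcs']]
```

In a real Lambda, `assume_role` would be `establish_role` and the results would be written to DynamoDB.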

To make this work, all we need is a simple role in each account we want cidr-house-rules to track. We created a simple Terraform module to roll this role out to our accounts and shared it on GitHub.
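The role needs two policy documents: a trust policy that lets the central account assume it, and a read-only permissions policy. A minimal sketch of both, built as Python dicts, follows. The principal format and action names are standard IAM, but the specific list of read-only actions is an assumption based on the resources this post mentions, not the contents of Trulia's Terraform module.

```python
def trust_policy(central_account_id):
    """Trust policy allowing the central cidr-house-rules account to assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"AWS": f"arn:aws:iam::{central_account_id}:root"},
            "Action": "sts:AssumeRole",
        }],
    }


# Assumed read-only actions covering VPCs, EIPs, NAT Gateways,
# VPC endpoints, and load balancers; the real module's policy may differ.
READ_ONLY_ACTIONS = (
    "ec2:DescribeVpcs",
    "ec2:DescribeAddresses",
    "ec2:DescribeNatGateways",
    "ec2:DescribeVpcEndpoints",
    "elasticloadbalancing:DescribeLoadBalancers",
)


def read_only_policy(actions=READ_ONLY_ACTIONS):
    """Permissions policy granting only the listed describe actions."""
    return {
        "Version": "2012-10-17",
        "Statement": [{"Effect": "Allow", "Action": list(actions), "Resource": "*"}],
    }
```

Passing either dict to `json.dumps` yields a document that can be attached to the role.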

We deploy cidr-house-rules with Jenkins and run separate development and production environments. When we change the code, the change is covered by our `unittest` suite in the Travis CI build, and we can also compare the end results in our dev and prod DynamoDB tables, which should contain the same number of items.
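A dev-versus-prod item-count check could look roughly like the sketch below. It pages through a DynamoDB `scan` with `Select='COUNT'`, following `LastEvaluatedKey` until no pages remain. The helper names and table names are hypothetical; note that a COUNT scan still reads the whole table and consumes read capacity.

```python
def count_items(dynamodb_client, table_name):
    """Count every item in a table using paginated COUNT scans."""
    total = 0
    kwargs = {'TableName': table_name, 'Select': 'COUNT'}
    while True:
        page = dynamodb_client.scan(**kwargs)
        total += page['Count']
        last_key = page.get('LastEvaluatedKey')
        if not last_key:
            return total
        # Resume the scan where the previous page stopped.
        kwargs['ExclusiveStartKey'] = last_key


def tables_match(dynamodb_client, dev_table, prod_table):
    """True when the dev and prod tables hold the same number of items."""
    return count_items(dynamodb_client, dev_table) == count_items(dynamodb_client, prod_table)
```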

Core Functionality

The cidr-house-rules import functions (import_cidrs.py, import_eips.py, import_nat_gateways.py, and available_ips.py) are Lambda functions that run on a schedule to collect important network information from all accounts and all regions. The api_handlers.py module holds our HTTP-triggered handlers, which return data to clients. A few example endpoints:

- get_elbs_for_all – Return an array of ELBs (v2 and classic).

- get_nat_gateways_for_team – Return a comma-separated string of EIPs for a given team.

- check_conflict – Look up a given CIDR block and check whether it conflicts with another CIDR block already in use.
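The core of a CIDR conflict check can be expressed with Python's standard `ipaddress` module. The sketch below is an illustration of the idea, not the project's `check_conflict` handler; the function name `find_conflicts` is hypothetical, and in cidr-house-rules the list of used blocks would come from DynamoDB.

```python
import ipaddress


def find_conflicts(candidate, used_cidrs):
    """Return every used CIDR block that overlaps `candidate`.

    ip_network() is strict by default, so a block with host bits set
    (for example "10.0.0.1/16") raises ValueError instead of being guessed at.
    """
    candidate_net = ipaddress.ip_network(candidate)
    return [
        cidr for cidr in used_cidrs
        if ipaddress.ip_network(cidr).overlaps(candidate_net)
    ]
```

For example, with `10.0.0.0/16` and `10.1.0.0/16` in use, a proposed `10.0.0.0/8` conflicts with both, while `192.168.0.0/16` conflicts with neither.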

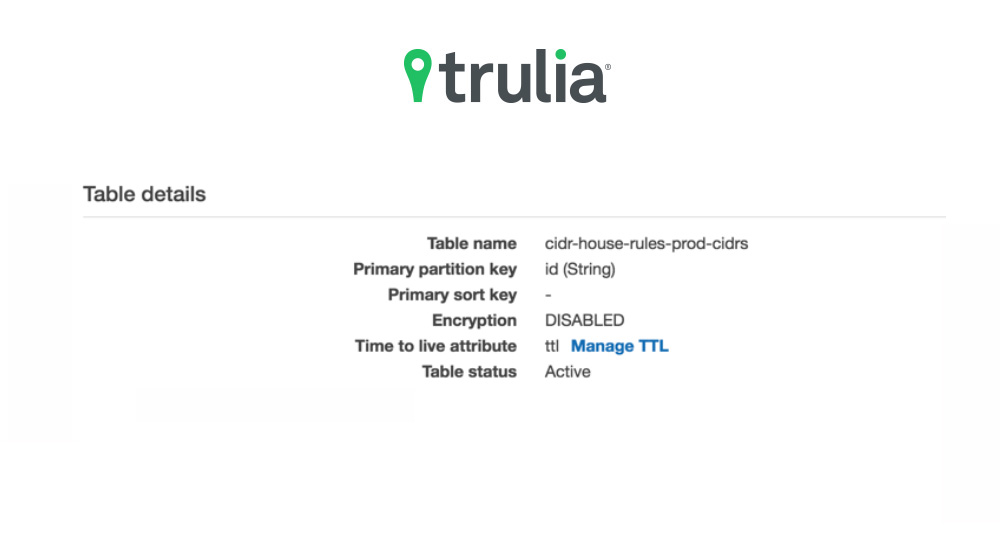

Handling Old Data

Because DynamoDB’s time-to-live (TTL) feature wasn’t available when we started this project, we initially pruned resources that had been deleted from accounts directly, using Python. The pruning code scanned each account the same way the import functions do when adding new resources, and built a list of the resources it found. It then compared that list with the resources stored in DynamoDB; any stored resources no longer present on the account were aged out by iterating over them and calling “delete_item.”

We later switched to TTL for removing old data from DynamoDB. TTL pruning works by enabling TTL on the table (“Manage TTL” in the console) and writing a TTL attribute on each item. With TTL enabled on the appropriate tables, we no longer needed the programmatic pruning.
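A minimal sketch of the TTL approach follows. `update_time_to_live` is the DynamoDB API behind "Manage TTL". The attribute name `ttl` and the helper names are assumptions. DynamoDB expects the TTL attribute to be a Number holding a Unix epoch timestamp in seconds, and it deletes expired items in the background rather than at the exact expiry time.

```python
import time

TTL_ATTRIBUTE = 'ttl'  # hypothetical attribute name


def enable_ttl(dynamodb_client, table_name):
    """Turn on TTL for a table, keyed on TTL_ATTRIBUTE."""
    return dynamodb_client.update_time_to_live(
        TableName=table_name,
        TimeToLiveSpecification={'Enabled': True, 'AttributeName': TTL_ATTRIBUTE},
    )


def with_ttl(item, lifetime_seconds, now=None):
    """Return a copy of a low-level DynamoDB item carrying an expiry timestamp."""
    now = time.time() if now is None else now
    stamped = dict(item)
    # Epoch seconds, stored as a DynamoDB Number ('N') attribute.
    stamped[TTL_ATTRIBUTE] = {'N': str(int(now + lifetime_seconds))}
    return stamped
```

If each scheduled import rewrites its items with a lifetime somewhat longer than the import interval, resources that still exist keep getting refreshed, while resources deleted from an account simply stop being refreshed and expire.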

Table with TTL attribute enabled:

Monitoring

To monitor our Lambda functions, we used serverless-plugin-aws-alerts. It configures AWS CloudWatch alarms that publish to an AWS SNS topic, which we subscribe to by email to receive alerts. We configured alerts on functionErrors and functionThrottles, which give us visibility into possible issues with our functions:

```yaml
alarms:
  - functionErrors
  - functionThrottles
```

Using cidr-house-rules

The primary consumers of the cidr-house-rules API are Trulia’s data-driven Terraform modules. These modules use Terraform’s HTTP data source, configured in the module code to request information from cidr-house-rules API endpoints. We consider the HTTP data source a hidden gem in Terraform: it lets you write dynamic, data-driven modules whose configuration changes with the HTTP response. This works well for us at Trulia with cidr-house-rules, because we don’t need to hard-code IP addresses in our modules. We will be open sourcing some of the Terraform modules that we use with cidr-house-rules soon.
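The transformation such a module performs on an endpoint like get_nat_gateways_for_team can be sketched in Python: turn the comma-separated EIP string into `/32` CIDR blocks suitable for Security Group rules. This assumes the endpoint returns a plain-text body, and the URL passed to `fetch_team_cidrs` is a placeholder; in Terraform the same step would be done with expressions over the HTTP data source's response.

```python
import ipaddress
import urllib.request


def eips_to_cidrs(body):
    """Convert "1.2.3.4, 5.6.7.8" into ["1.2.3.4/32", "5.6.7.8/32"].

    ip_address() rejects anything that is not a valid IP, so a malformed
    response fails loudly instead of producing a bad Security Group rule.
    """
    parts = [part.strip() for part in body.split(',')]
    return [f"{ipaddress.ip_address(part)}/32" for part in parts if part]


def fetch_team_cidrs(api_url):
    """Fetch the EIP list from a (hypothetical) cidr-house-rules endpoint URL."""
    with urllib.request.urlopen(api_url) as response:
        return eips_to_cidrs(response.read().decode('utf-8'))
```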

Challenges

We found testing a serverless app challenging. We started adding unit tests that use moto (https://github.com/spulec/moto), a library that mocks AWS services. It abstracts away much of the test setup, and we look forward to writing more tests with it.

Because we call the Lambda functions on a fixed schedule, cost and utilization are easy to predict. Running this service costs Trulia under $50 per month for both the dev and prod environments. Your mileage may vary depending on the number of accounts you need to monitor.

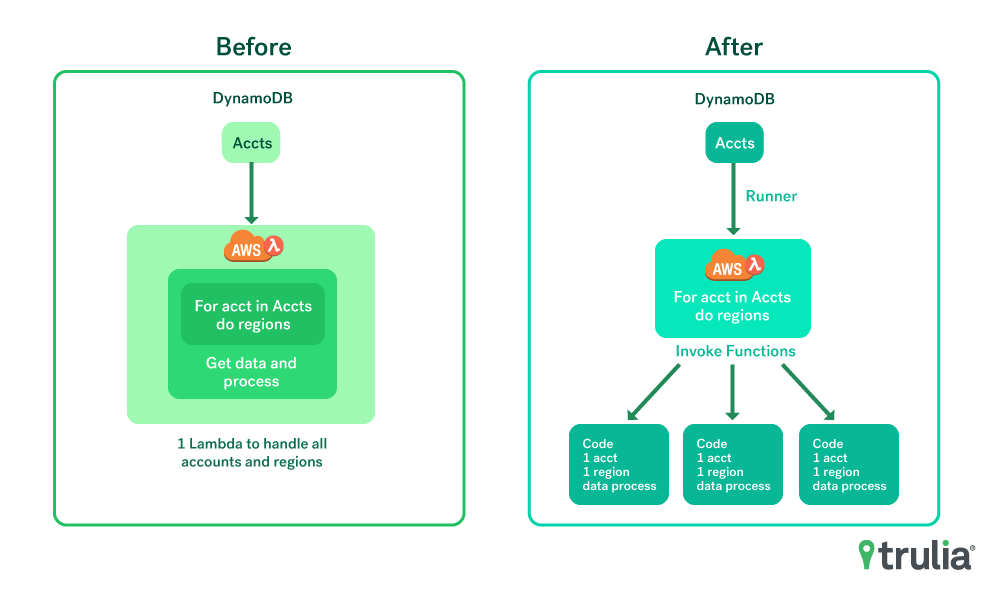

We ran into a function scaling issue with our initial design of cidr-house-rules. Each function, when invoked, looped over every managed account and then every region (See diagram 1). This works fine until you have about a dozen AWS accounts. At that point, the maximum Lambda timeout (5 minutes at the time) is reached and the import functions start timing out. Our fix in this PR https://github.com/trulia/cidr-house-rules/pull/17 added a “runner” function that asynchronously invokes a separate import function for each managed account and region, so the work scales to an arbitrary number of accounts and regions. The following diagram shows the before and after of this scaling challenge.

Scaling challenge:

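```python
import json

import boto3

# Lambda client used to launch the import functions.
lambda_client = boto3.client('lambda')


def invoke_process(function_name, account_id, region):
    """Launch target function_name on account_id and region.

    InvocationType='Event' makes the call asynchronous, so the runner
    does not wait for each import to finish.
    """
    invoke_payload = json.dumps({
        "account": account_id,
        "region": region,
    })

    lambda_client.invoke(
        FunctionName=function_name,
        InvocationType='Event',
        Payload=invoke_payload,
    )
```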
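The runner's fan-out can be sketched as a nested loop that fires one asynchronous invocation per account-region pair, plus a helper that reads the payload on the receiving side. The names `run_imports` and `read_target` are hypothetical, and the code merged in PR 17 may be structured differently. The design point is that each invocation handles a single account and region, so per-invocation runtime stays roughly constant no matter how many accounts are added.

```python
def run_imports(function_name, account_ids, regions, invoke):
    """Fire one asynchronous import per (account, region) pair.

    `invoke` is expected to behave like invoke_process().
    Returns the number of invocations launched.
    """
    launched = 0
    for account_id in account_ids:
        for region in regions:
            invoke(function_name, account_id, region)
            launched += 1
    return launched


def read_target(event):
    """Extract the account and region an import function was launched for."""
    return event["account"], event["region"]
```

In the deployed runner, `invoke` would be `invoke_process`, and each import function would call `read_target` on its incoming event.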

Benefits

We gained a handful of benefits by implementing this system.

- A cost-effective source of truth for information that is vital for cross-account communication

- Visibility into our infrastructure across multiple AWS accounts

- An API interface that works with Terraform

- A scalable serverless application

Conclusion

Cidr-house-rules was fun to build as a team, and using serverless on AWS saved us time by speeding up development and release. As a result, we now worry less about infrastructure and can focus more on the application.

We think this project will be useful to those with multiple AWS accounts and the need to dynamically update infrastructure code. If you try it out, let us know what you think.